This week, I have been experimenting with Deep Research, the AI agent OpenAI released on Sunday that it says is capable of completing multi-step research tasks and synthesizing large amounts of online information. Not to be confused with the controversial Chinese AI product DeepSeek, Deep Research is said to be particularly useful for people in fields such as finance, science and law.

Already this week, I published two of these experiments. In the first, I used it to analyze the legality of President Trump’s pause of federal grants. In about 10 minutes, it produced a detailed 9,000-word memorandum, concluding that the pause “appears to rest on shaky legal ground.” Next, I used it to research and recommend the best law practice management suite for a four-lawyer firm. It produced a fairly detailed response, including two charts comparing features, pricing, usability, security, support and user satisfaction.

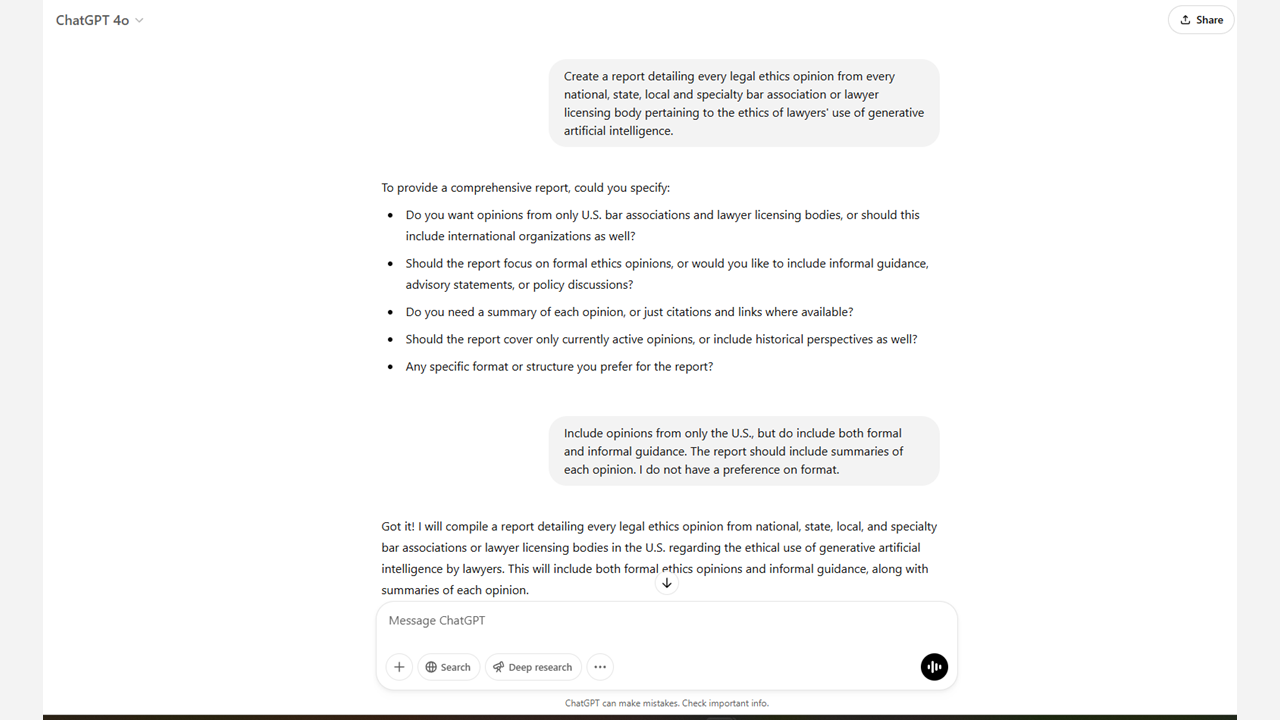

For today’s task, I asked it to create a report detailing every legal ethics opinion pertaining to generative AI. Here was my exact prompt:

“Create a report detailing every legal ethics opinion from every national, state, local and specialty bar association or lawyer licensing body pertaining to the ethics of lawyers’ use of generative artificial intelligence.”

It responded to my prompt with several questions about the scope of the research I’d requested, such as whether it should focus only on formal ethics opinions or also include informal guidance. After I answered its questions, it produced the report published below.

After it produced the report, I asked it to also summarize the findings in a chart, which is what you see immediately below.

I have not verified that this list is complete. If anyone knows where I can find a complete list to compare against, please let me know.

That said, I was again impressed by its ability to conduct comprehensive research across multiple sources and generate a report. The entire task took it 15 minutes.

| Issuing Body | Opinion Title/Number | Date Issued | Key Themes | Disclosure Required | Billing Guidance |
|---|---|---|---|---|---|
| ABA | Formal Opinion 512 | July 2024 | Competence, Confidentiality, Supervision, Candor, Fees | Case-dependent | AI efficiency should reduce fees |
| California | Practical Guidance | November 2023 | Confidentiality, Competence, AI Disclosure, Supervision | Not mandatory, but recommended | Efficiency gains must be fair to clients |
| Florida | Advisory Opinion 24-1 | January 2024 | Confidentiality, Supervision, Fees, AI Transparency | Client informed consent advised | Must not charge for AI time savings |
| New York State Bar | Task Force Report | April 2024 | Competence, Bias, AI Oversight, Client Communication | Recommended in certain cases | Clients should benefit from AI-driven efficiency |
| New York City Bar | Formal Opinion 2024-5 | August 2024 | Confidentiality, Competence, Supervision, AI Disclosure | Required for external AI use | No overcharging for AI use |
| New Jersey Supreme Court | Preliminary Guidelines | January 2024 | Competence, Candor, Supervision, AI Verification | Not always, but recommended | No billing for time not actually spent |
| Pennsylvania & Philadelphia Bars | Joint Opinion 2024-200 | June 2024 | Competence, Candor, AI Hallucinations, Billing Ethics | Yes, in some AI use cases | Cannot bill AI time as manual work |
| Kentucky | Ethics Opinion KBA E-457 | March 2024 | Competence, AI Use in Billing, Supervision, Client Consent | Depends on AI’s impact on case | Cannot bill for AI learning time |
| North Carolina | Formal Ethics Opinion 2024-1 | November 2024 | Competence, Supervision, Confidentiality, AI Oversight | Not always, but must protect confidentiality | AI-based efficiency should lower costs |
| Texas | Proposed Opinion 2024-6 | November 2024 | Competence, Confidentiality, AI Trustworthiness, Supervision | Not explicitly required | Fair pricing required for AI use |
| Virginia | AI Guidance Update | August 2024 | Confidentiality, Billing, Supervision, AI Court Compliance | Not mandated but recommended | AI costs must align with ethical billing |
| D.C. Bar | Ethics Opinion 388 | September 2024 | Competence, AI Verification, Supervision, Client Files | Required in specific situations | No excess fees for AI use |
| USPTO | Practice Guidance (2023–2024) | April 2024 | Candor, Confidentiality, AI Use in Legal Submissions | Court compliance required | Legal AI use cannot inflate costs |

National Bar Associations

American Bar Association – Formal Opinion 512 (July 2024)

The ABA Standing Committee on Ethics and Professional Responsibility issued Formal Opinion 512, “Generative Artificial Intelligence Tools,” on July 29, 2024 americanbar.org americanbar.org. This is the ABA’s first ethics guidance focused on generative AI use by lawyers. It instructs attorneys to “fully consider their applicable ethical obligations, including their duties to provide competent legal representation, to protect client information, to communicate with clients, to supervise their employees and agents, to advance only meritorious claims and contentions, to ensure candor toward the tribunal, and to charge reasonable fees.” jenkinslaw.org In short, existing ABA Model Rules apply to AI just as they do to any technology.

Key concerns and recommendations:

- The opinion emphasizes that lawyers must maintain technological competence – understanding the benefits and risks of the AI tools they use jenkinslaw.org.

- It notes that the duty of confidentiality (Model Rule 1.6) requires caution when inputting client data into AI tools; lawyers should ensure no confidential information is revealed without informed client consent jenkinslaw.org.

- Lawyers should also evaluate whether to inform or obtain consent from clients about AI use, especially if using it in ways that affect the representation jenkinslaw.org.

- AI outputs must be independently verified for accuracy to fulfill duties of candor and avoid filing false or frivolous material (Rules 3.3, 3.1) jenkinslaw.org. The ABA highlights that “hallucinations” (convincing but false outputs) are a major pitfall americanbar.org.

- Supervision duties (Rules 5.1 and 5.3) mean lawyers must oversee subordinate lawyers, nonlawyers, and the AI tools they use jenkinslaw.org.

- The opinion also warns that fees must be reasonable – if AI improves efficiency, lawyers should not overbill for time not actually spent kaiserlaw.com.

Overall, Formal Op. 512 provides a comprehensive framework mapping generative AI use to existing ethics rules americanbar.org americanbar.org. (See ABA Formal Op. 512 jenkinslaw.org for full text.)

State Bar Associations and Regulatory Bodies

California – “Practical Guidance” by COPRAC (November 2023)

The State Bar of California took early action by issuing “Practical Guidance for the Use of Generative AI in the Practice of Law,” approved by the Bar’s Board of Trustees on Nov. 16, 2023 calbar.ca.gov jdsupra.com. Rather than a formal opinion, it is a guidance document (in chart format) developed by the Committee on Professional Responsibility and Conduct (COPRAC). It applies California’s Rules of Professional Conduct to generative AI scenarios.

Key points:

- California’s guidance stresses confidentiality – attorneys “must not input any confidential client information” into AI tools that lack adequate protections calbar.ca.gov. Lawyers should vet an AI vendor’s security and data use policies, and anonymize or refrain from sharing sensitive data unless certain it will be protected calbar.ca.gov calbar.ca.gov.

- The duty of competence and diligence requires understanding how the AI works and its limitations jdsupra.com. Lawyers should review AI outputs for accuracy and bias, and “AI should never replace a lawyer’s professional judgment.” jdsupra.com If AI assists with research or drafting, the attorney must critically review the results.

- The guidance also addresses supervision: firms should train and supervise lawyers and staff in proper AI use jdsupra.com.

- Communication with clients may entail disclosing AI use in some cases – e.g., if it materially affects the representation – but California did not mandate disclosure in all instances jdsupra.com.

- Finally, the guidance addresses candor: the duty of candor to tribunals means attorneys must check AI-generated citations and facts to avoid false statements in court jdsupra.com.

Overall, California’s approach is to treat AI as another technology that must be used consistent with existing rules on competence, confidentiality, supervision, etc., providing “guiding principles rather than best practices” calbar.ca.gov.

(Source: State Bar of CA Generative AI Guidance jdsupra.com jdsupra.com.)

Florida – Advisory Opinion 24-1 (January 2024)

The Florida Bar issued Proposed Advisory Opinion 24-1 in late 2023, which was adopted by the Bar’s Board of Governors in January 2024 floridabar.org floridabar.org. Titled “Lawyers’ Use of Generative AI,” this formal ethics opinion gives a green light to using generative AI “to the extent that the lawyer can reasonably guarantee compliance with the lawyer’s ethical obligations.” floridabar.org It identifies four focus areas: confidentiality, oversight, fees, and advertising hinshawlaw.com hinshawlaw.com.

Key points:

- Confidentiality: Florida stresses that protecting client confidentiality (Rule 4-1.6) is paramount. Lawyers should take “reasonable steps to prevent inadvertent or unauthorized disclosure” of client information by an AI system jdsupra.com. The opinion considers it “advisable to obtain a client’s informed consent before using a third-party AI that would disclose confidential information.” jdsupra.com This aligns with prior cloud-computing opinions.

- Oversight: Generative AI must be treated like a nonlawyer assistant – the lawyer must supervise and vet its work jdsupra.com. The opinion warns that lawyers relying on AI face “the same perils as relying on an overconfident nonlawyer assistant” floridabar.org. Attorneys must review AI outputs (research, drafts, etc.) for accuracy and legal soundness before use floridabar.org. Notably, after the infamous Mata v. Avianca incident of fake cases, Florida emphasizes candor: no frivolous or false material from AI should be submitted floridabar.org.

- Fees: Improved efficiency from AI cannot be used to charge inflated fees. A lawyer “can ethically only charge a client for actual costs incurred” – time saved by AI should not be billed as if the lawyer did the work jdsupra.com. If a lawyer will charge for using an AI tool (as a cost), the client must be informed in writing jdsupra.com. Likewise, training time – a lawyer’s time learning an AI tool – cannot be billed to the client jdsupra.com.

- Advertising: If lawyers advertise their use of AI, those advertisements must not be false or misleading. Florida specifically notes that if a lawyer uses a chatbot to interact with potential clients, those users must be told they are interacting with an AI, not a human lawyer jdsupra.com. Any claims about an AI’s capabilities must be objectively verifiable (no puffery that your AI is “better” than others without proof) floridabar.org floridabar.org.

In sum, Florida concludes: “a lawyer may ethically utilize generative AI, but only to the extent the lawyer can reasonably guarantee compliance with duties of confidentiality, candor, avoiding frivolous claims, truthfulness, reasonable fees, and proper advertising.” floridabar.org.

(Sources: Florida Bar Op. 24-1 floridabar.org jdsupra.com.)

New York State Bar Association – Task Force Report (April 2024)

The New York State Bar Association (NYSBA) did not issue a formal ethics opinion through its ethics committee, but its Task Force on Artificial Intelligence produced a comprehensive 85-page report adopted by the House of Delegates on April 6, 2024 floridabar.org floridabar.org. This report includes a chapter on the “Ethical Impact” of AI on law practice floridabar.org, effectively providing guidance to NY lawyers. It mirrors many concerns seen in formal opinions elsewhere.

Key points:

- The NYSBA report underscores competence and cautions against “techno-solutionism.” It notes that “a refusal to use technology that makes legal work more accurate and efficient may be considered a refusal to provide competent representation” nysba.org nysba.org – implying lawyers should stay current with helpful AI tools. At the same time, it warns attorneys not to blindly trust AI as a silver bullet. The report describes “techno-solutionism” as the overconfident belief that new tech (like gen AI) can solve all problems, reminding lawyers that human verification is still required nysba.org nysba.org.

- The infamous Avianca case is cited to illustrate the need to verify AI outputs and supervise the “nonlawyer” tool (AI) under Rule 5.3 nysba.org.

- The report addresses the duty of confidentiality and privacy in depth: lawyers must ensure client information isn’t inadvertently shared or used to train public AI models nysba.org nysba.org. It suggests that if AI tools store or learn from inputs, that raises confidentiality concerns nysba.org. Client consent or use of secure “closed” AI systems may be needed to protect privileged data.

- The report also covers supervision (Rule 5.3) – attorneys should supervise AI use similarly to how they supervise human assistants nysba.org.

- It touches on bias and fairness, noting generative AI trained on biased data could perpetuate discrimination, which lawyers must guard against lawnext.com.

- Interestingly, the NYSBA guidance also links AI use to reasonable fees: it suggests effective use of AI can factor into whether a fee is reasonable jdsupra.com jdsupra.com (e.g., inefficiently refusing to use available AI might waste client money, whereas using AI and still charging full hours might be unreasonable).

In sum, New York’s bar leaders affirm that ethical duties of competence, confidentiality, and supervision fully apply to AI. They encourage using AI’s benefits to improve service, but caution against its risks and urge ongoing attorney oversight floridabar.org floridabar.org.

(Sources: NYSBA Task Force Report nysba.org nysba.org.)

New York City Bar Association – Formal Opinion 2024-5 (August 2024)

The New York City Bar Association Committee on Professional Ethics issued Formal Ethics Opinion 2024-5 on August 7, 2024 nydailyrecord.com nydailyrecord.com. This opinion, in a user-friendly chart format, provides practical guidelines for NYC lawyers on generative AI. The Committee explicitly aimed to give “guardrails and not hard-and-fast restrictions” in this evolving area nydailyrecord.com.

Key points:

- Confidentiality: The NYC Bar draws a distinction between “closed” AI systems (e.g., an in-house or vendor tool that does not share data externally) and public AI services like ChatGPT. If a lawyer uses an AI that stores or shares inputs outside the firm, client informed consent is required before inputting any confidential information nydailyrecord.com. Even with closed/internal AI, lawyers must maintain internal confidentiality protections. The opinion warns lawyers to review AI Terms of Use regularly to ensure the provider isn’t using or exposing client data without consent nydailyrecord.com.

- Competence: Echoing others, NYC advises that lawyers “understand to a reasonable degree how the technology works, its limitations, and the applicable Terms of Use” before using generative AI nydailyrecord.com. Attorneys should avoid delegating their professional judgment to AI; any AI output is just a starting point or draft nydailyrecord.com. Lawyers must ensure outputs are accurate and tailored to the client’s needs – essentially, verify everything and edit AI-generated material so that it truly serves the client’s interests nydailyrecord.com.

- Supervision: Firms should implement policies and training for lawyers and staff on acceptable AI use nydailyrecord.com. The Committee notes that client intake chatbots (if used on a firm’s website, for example) require special oversight to avoid inadvertently forming attorney-client relationships or giving legal advice without proper vetting nydailyrecord.com. In other words, a chatbot interacting with the public should be carefully monitored by lawyers to ensure it doesn’t mislead users about its nature or create unintended obligations nydailyrecord.com.

The NYC Bar’s guidance aligns with California’s in format and substance, reinforcing that the core duties of confidentiality, competence (tech proficiency), and supervision all apply when lawyers use generative AI tools nydailyrecord.com nydailyrecord.com.

(Source: NYC Bar Formal Op. 2024-5 nydailyrecord.com nydailyrecord.com.)

New Jersey Supreme Court – Preliminary Guidelines (January 2024)

In New Jersey, the state’s highest court itself weighed in. On January 24, 2024, the New Jersey Supreme Court’s Committee on AI and the Courts issued “Preliminary Guidelines on the Use of AI by New Jersey Lawyers,” which were published as a Notice to the Bar njcourts.gov njcourts.gov. These guidelines, effective immediately, aim to help NJ lawyers comply with existing Rules of Professional Conduct when using generative AI njcourts.gov.

Key points:

- The Court made clear that AI does not change lawyers’ fundamental duties. Any use of AI “must be employed with the same commitment to diligence, confidentiality, honesty, and client advocacy as traditional methods of practice.” njcourts.gov In other words, tech advances do not dilute responsibilities.

- The NJ guidelines highlight accuracy and truthfulness: lawyers have an ethical duty to ensure their work is accurate, so they must always check AI-generated content for “hallucinations” or errors before relying on it jdsupra.com. Submitting false or fake information generated by AI would violate rules against misrepresentations to the court. The guidelines reiterate candor to tribunals – attorneys must not present AI-produced output containing fabricated cases or facts (the Mata/Avianca situation is alluded to) jdsupra.com.

- Regarding communication and client consent, NJ took a measured approach: there is “no per se requirement to inform a client” about every AI use, unless not telling the client would prevent the client from making informed decisions about the representation jdsupra.com. For example, if AI is used in a trivial manner (typo correction, formatting), disclosure isn’t required; but if it’s used in substantive tasks that affect the case, lawyers should consider informing the client, especially if there’s heightened risk.

- Confidentiality: Lawyers must ensure any AI tool is secure to avoid inadvertent disclosures of client information jdsupra.com. This echoes the duty to use “reasonable efforts” to safeguard confidential data (RPC 1.6).

- No misconduct: The Court reminds lawyers that all rules on attorney misconduct (dishonesty, fraud, bias, etc.) apply to AI usage jdsupra.com. For instance, using AI in a way that produces discriminatory outcomes or that frustrates justice would breach Rule 8.4.

- Supervision: Law firms must supervise how their lawyers and staff use AI jdsupra.com – establishing internal policies to ensure ethical use.

Overall, New Jersey’s top court signaled that it embraces innovation (noting AI’s potential benefits) but insists lawyers “balance the benefits of innovation while safeguarding against misuse.” njcourts.gov

(Sources: NJ Supreme Court Guidelines jdsupra.com jdsupra.com.)

Pennsylvania & Philadelphia Bars – Joint Opinion 2024-200 (June 2024)

The Pennsylvania Bar Association (PBA) and Philadelphia Bar Association jointly issued Formal Opinion 2024-200 in mid-2024 lawnext.com lawnext.com. This collaborative opinion (“Joint Formal Op. 2024-200”) provides ethical guidance for Pennsylvania lawyers using generative AI. It repeatedly emphasizes that the same rules apply to AI as to any technology lawnext.com.

Key points:

- The joint opinion places heavy emphasis on competence (Rule 1.1). It states: “Lawyers must be proficient in using technological tools to the same extent they are in traditional methods” lawnext.com. In other words, attorneys should treat AI as part of the competence duty – understanding e-discovery software, legal research databases, and now generative AI is part of being a competent lawyer lawnext.com.

- The opinion acknowledges generative AI’s unique risk: it can hallucinate (generate false citations or facts) lawnext.com. Thus, due diligence is required – lawyers must verify all AI outputs, especially legal research results and citations lawnext.com lawnext.com. As the LawNext summary bluntly puts it, if you ask AI for cases and “then file them in court without even bothering to read or Shepardize them, that is stupid.” lawnext.com (The opinion itself uses more polite language, but this captures the spirit.)

- It highlights bias as well: AI may carry implicit biases from training data, so lawyers should be alert to any discriminatory or skewed content in AI output lawnext.com.

- The Pennsylvania/Philadelphia opinion also advises lawyers to communicate with clients about AI use. Specifically, lawyers should be transparent and “provide clear, transparent explanations” of how AI is being used in the case lawnext.com lawnext.com. In some situations, obtaining client consent before using certain AI tools is recommended lawnext.com lawnext.com – e.g., if the tool will handle confidential information or significantly shape the legal work.

- The opinion lays out “12 Points of Responsibility” for using gen AI lawnext.com lawnext.com, which include many of the above: ensure truthfulness and accuracy of AI-derived content, double-check citations, maintain confidentiality (ensure AI vendors keep data secure) lawnext.com, check for conflicts (make sure use of AI doesn’t introduce any conflict of interest) lawnext.com, and be transparent with clients, courts, and colleagues about AI use and its limitations lawnext.com.

- It also addresses proper billing practices: lawyers shouldn’t overcharge when AI boosts efficiency lawnext.com. If AI saves time, the lawyer should not bill as if they did the work manually – they may bill for the actual time or consider value-based fees, but padding hours violates the rule on reasonable fees lawnext.com.

Overall, the Pennsylvania and Philadelphia bars take the stance that embracing AI is fine, even beneficial, as long as lawyers “remain fully accountable for the results,” use AI carefully, and don’t neglect any ethical duty in the process lawnext.com lawnext.com.

(Sources: Joint PBA/Phila. Opinion 2024-200 summarized by Ambrogi lawnext.com lawnext.com.)

Kentucky – Ethics Opinion KBA E-457 (March 2024)

The Kentucky Bar Association issued Ethics Opinion KBA E-457, “The Ethical Use of Artificial Intelligence in the Practice of Law,” on March 15, 2024 cdn.ymaws.com. This formal opinion (finalized after a comment period in mid-2024) provides a nuanced roadmap for Kentucky lawyers. It not only answers basic questions but also offers broader insight, reflecting the work of a KBA Task Force on AI techlawcrossroads.com.

Key points:

- Competence: Like other jurisdictions, Kentucky affirms that keeping abreast of technology (including AI) is a mandatory aspect of competence techlawcrossroads.com techlawcrossroads.com. Kentucky’s Rule 1.1 Comment 6 (equivalent to ABA Comment 8) says lawyers “should keep abreast of … the benefits and risks associated with relevant technology.” The opinion stresses this is not optional: “It’s not a ‘should’; it’s a must.” techlawcrossroads.com Lawyers cannot ethically ignore AI’s existence or potential in law practice techlawcrossroads.com techlawcrossroads.com (implying that failing to understand how AI might improve service could itself be a lapse in competence).

- Disclosure to clients: Kentucky takes a practical stance that there is “no duty to disclose to the client the ‘rote’ use of AI generated research,” absent special circumstances techlawcrossroads.com. If an attorney is just using AI as a tool (like one might use Westlaw or a spell-checker), they generally need not inform the client. However, there are important exceptions – if the client has specifically limited use of AI, or if use of AI presents significant risk or would require client consent under the rules, then disclosure is needed techlawcrossroads.com. Lawyers should discuss the risks and benefits of AI with clients if client consent is required for its use (for example, if AI will process confidential data, informed consent may be wise) techlawcrossroads.com.

- Fees: KBA E-457 is very direct about fees and AI. If AI significantly reduces the time spent on a matter, the lawyer may need to reduce their fees accordingly techlawcrossroads.com. A lawyer cannot charge a client as if a task took 5 hours if AI allowed it to be done in 1 hour – that would make the fee unreasonable. The opinion also says a lawyer can only charge a client for the expense of using AI (e.g., the cost of a paid AI service) if the client agrees to that fee in writing techlawcrossroads.com. Otherwise, passing along AI tool costs may be impermissible. In short, AI’s efficiencies should benefit clients, not become a hidden profit center.

- Confidentiality: Lawyers have a “continuing duty to safeguard client information if they use AI,” and must comply with all applicable court rules on AI use techlawcrossroads.com. This means vetting AI providers’ security and ensuring no confidential data is exposed. Kentucky echoes that attorneys must understand the terms and operation of any third-party AI system they use techlawcrossroads.com. They should know how the AI service stores and uses data.

- Court rules compliance: Notably, the opinion reminds lawyers to follow any court-imposed rules about AI (for instance, if a court requires disclosure of AI-drafted filings, the lawyer must do so) cdn.ymaws.com.

- Firm policies and training: KBA E-457 advises law firms to create informed policies on AI use and to supervise those they manage in following these policies techlawcrossroads.com.

In summary, Kentucky’s opinion encourages lawyers to embrace AI’s potential but to do so carefully: stay competent with the technology, be transparent when needed, adjust fees fairly, protect confidentiality, and always maintain ultimate responsibility for the work. It concludes that Kentucky lawyers “cannot run from or ignore AI.” techlawcrossroads.com

(Source: KBA E-457 (2024) via TechLaw Crossroads summary techlawcrossroads.com techlawcrossroads.com.)

North Carolina – Formal Ethics Opinion 2024-1 (November 2024)

The North Carolina State Bar adopted 2024 Formal Ethics Opinion 1, “Use of Artificial Intelligence in a Law Practice,” on November 1, 2024 ncbar.gov ncbar.gov. This opinion squarely addresses whether and how NC lawyers can use AI tools consistent with their ethical duties.

Key points:

- The NC State Bar gives a cautious “Yes” to using AI, under specific conditions: “Yes, provided the lawyer uses any AI program, tool, or resource competently, securely to protect client confidentiality, and with proper supervision when relying on the AI’s work product.” ncbar.gov. That single sentence captures the three pillars of NC’s guidance: competence, confidentiality, and supervision. NC acknowledges that nothing in the Rules explicitly prohibits AI use ncbar.gov, so it comes down to applying existing rules.

- Competence: Lawyers must understand the technology sufficiently to use it effectively and safely ncbar.gov. Rule 1.1 and its Comment in NC (which, like the ABA, includes tech competence) require lawyers to know what they don’t know – if a lawyer isn’t competent with an AI tool, they must get up to speed or refrain. NC emphasizes that using AI is often the lawyer’s own decision, but it must be made prudently, considering factors like the tool’s reliability and cost-benefit for the client ncbar.gov ncbar.gov.

- Confidentiality & Security: Rule 1.6(c) in North Carolina obligates lawyers to make reasonable efforts to prevent unauthorized disclosure of client information. So, before using any cloud-based or third-party AI, the lawyer must ensure it is “sufficiently secure and compatible with the lawyer’s confidentiality obligations.” ncbar.gov ncbar.gov. The opinion suggests attorneys evaluate providers as they would any vendor handling client data – e.g., examine terms of service, data storage policies, etc., similar to prior NC guidance on cloud computing ncbar.gov ncbar.gov. If the AI is “self-learning” (using inputs to improve itself), lawyers should be wary that client data might later resurface to others ncbar.gov. NC stops short of mandating client consent for AI use, but it implies that if an AI tool can’t be used consistently with confidentiality, the lawyer should either not use it or get client permission.

Supervision and Independent Judgment: NC treats AI output much like work by a nonlawyer assistant. Under Rule 5.3, lawyers must supervise the use of AI tools and “exercise independent professional judgment in determining how (or if) to use the product of an AI tool” for a client ncbar.gov. This means a lawyer cannot blindly accept an AI’s result; they must review and verify it before relying on it. If an AI drafts a contract or brief, the lawyer is responsible for editing it and ensuring it is correct and appropriate. NC explicitly analogizes AI both to other software and to nonlawyer staff, placing it somewhere “between” a software tool and a nonlawyer assistant ncbar.gov. Thus, the lawyer must both know how to use the software and supervise its output as if it were a junior employee’s work.

Bottom line: NC 2024 FEO-1 concludes that a lawyer may use AI in practice (for tasks such as document review, legal research, and drafting) as long as the lawyer remains fully responsible for the outcome ncbar.gov. The opinion purposefully doesn’t dictate when AI is or is not appropriate, recognizing that the technology is evolving ncbar.gov. But it clearly states that a lawyer who decides to employ AI is “fully responsible” for its use and must ensure that the use is competent, confidential, and supervised ncbar.gov.

(Source: NC 2024 FEO-1 ncbar.gov.)

Texas – Proposed Opinion 2024-6 (Draft, November 2024)

The State Bar of Texas Professional Ethics Committee has circulated Proposed Ethics Opinion No. 2024-6 (posted for public comment on Nov. 19, 2024) regarding lawyers’ use of generative AI texasbar.com. (As of this writing, it is a draft opinion awaiting final adoption.) The draft provides a “high-level overview” of the ethical issues raised by AI, prepared at the request of a State Bar task force on AI texasbar.com.

Key points (draft): The proposed Texas opinion covers familiar ground. It notes that the duty of competence (Rule 1.01) extends to understanding relevant technology texasbar.com. Texas specifically cites its prior ethics opinions on cloud computing and metadata, which required lawyers to have a “reasonable and current understanding” of those technologies texasbar.com. By analogy, any Texas lawyer using generative AI “must have a reasonable and current understanding of the technology” and of its capabilities and limits texasbar.com. In practical terms, lawyers should educate themselves on how tools like ChatGPT actually work (e.g., that they predict text rather than retrieve vetted sources) and on their known pitfalls texasbar.com. The draft opinion describes Mata v. Avianca at some length to illustrate the danger of not understanding that such tools lack a reliable legal database texasbar.com.

On confidentiality (Rule 1.05 in Texas), the opinion again builds on prior guidance: lawyers must safeguard client information when using any third-party service texasbar.com. It suggests precautions similar to those for cloud storage: “acquire a general understanding of how the technology works; review (and potentially renegotiate) the Terms of Service; [ensure] the provider will keep data confidential; and stay vigilant about data security.” texasbar.com. (These examples are drawn from Texas Ethics Op. 680 on cloud computing, which the AI opinion heavily references.) If an AI tool cannot be used in a way that protects confidential information, the lawyer should not use it for those purposes.

The Texas draft also flags the duty to avoid frivolous submissions (Rule 3.01) and the duty of candor toward the tribunal (Rule 3.03) as directly relevant texasbar.com. Using AI doesn’t excuse a lawyer from these obligations: citing fake cases or making false statements is no less an ethical violation merely because an AI generated them. Lawyers must thoroughly vet AI-generated legal research and content to ensure it is grounded in real law and facts texasbar.com. The opinion essentially says that if you choose to use AI, you must double-check its work just as you would a junior lawyer’s memo or a nonlawyer assistant’s draft.

Supervision (Rules 5.01, 5.03): Supervising partners should put firm-wide measures in place so that any use of AI by their teams is ethical texasbar.com. This could mean creating policies on approved AI tools and requiring verification of AI outputs.

In summary, the Texas proposed opinion doesn’t ban generative AI; it provides a “snapshot” of the issues and reinforces that the core duties of competence, confidentiality, candor, and supervision must guide any use of AI in practice texasbar.com. (The committee acknowledges that the AI landscape is changing rapidly, so it focused on broad principles rather than specifics that might soon be outdated texasbar.com.) Once finalized, Texas’s opinion will likely align with the emerging consensus: lawyers can harness AI’s benefits if they remain careful and accountable.

(Source: Texas Proposed Op. 2024-6 texasbar.com.)

Virginia State Bar – AI Guidance Update (August 2024)

Around August 2024, the Virginia State Bar posted a short set of guidelines on generative AI as an update on its website nydailyrecord.com. This concise guidance stands out for its practicality and flexibility. Rather than issuing an extensive opinion, Virginia offered overarching advice that can adapt as AI technology evolves nydailyrecord.com.

Key points: Virginia first emphasizes that lawyers’ basic ethical responsibilities “have not changed” because of AI, and that generative AI presents issues “fundamentally similar” to those raised by other technology or by supervising people nydailyrecord.com. That framing drives the rest of the guidance: existing rules suffice.

On confidentiality, the Bar advises lawyers to vet how AI providers handle data, just as they would with any vendor nydailyrecord.com. Legal-specific AI products (designed for lawyers, with better data security) may offer more protection, but even then attorneys “must make reasonable efforts to assess” the security and “whether and under what circumstances” confidential information could be exposed nydailyrecord.com. In other words, even when using an AI tool marketed as secure for lawyers, you should confirm that it truly keeps your client’s data confidential (no sharing of, or training on, that data without consent) nydailyrecord.com.

Virginia notably aligns with most jurisdictions (and diverges from a stricter ABA stance) on client consent: “there is no per se requirement to inform a client about the use of generative AI in their matter” nydailyrecord.com. Unless something about the AI use would necessitate client disclosure (e.g., an agreement with the client, or an unusual risk such as entering sensitive information into a public AI tool), lawyers generally need not obtain consent for routine AI use nydailyrecord.com. This is consistent with the idea that using AI can be like using any other software tool behind the scenes.

Next, supervision and verification: The Bar stresses that lawyers must review all AI outputs as they would work done by a junior attorney or nonlawyer assistant nydailyrecord.com. Specifically, lawyers should “verify that any citations are accurate (and real)” and generally ensure that the AI’s work product is correct nydailyrecord.com. This duty extends to supervising others in the firm: if a paralegal or associate uses AI, the responsible lawyer must ensure they are doing so properly nydailyrecord.com.

On fees and billing, Virginia takes a clear stance: a lawyer may not bill a client for hours that were never actually worked because AI made the task faster nydailyrecord.com. “A lawyer may not charge an hourly fee in excess of the time actually spent … and may not bill for time saved by using generative AI.” nydailyrecord.com. If AI cuts a research task from 5 hours to 1, you can bill only the 1 hour actually spent, not 5. The Bar suggests considering alternative fee arrangements to account for AI’s value, rather than seeking windfalls through hourly billing nydailyrecord.com. As for passing along AI tool costs, the Bar says you can’t charge the client for your AI subscription or usage unless the charge is reasonable and permitted by the fee agreement nydailyrecord.com.
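To make the billing arithmetic concrete, here is a minimal Python sketch. The function names and the $300 hourly rate are hypothetical, chosen only for illustration; this is not a billing tool and is not drawn from the Virginia guidance itself. It simply encodes the rule as stated above: an hourly charge may reflect only time actually spent.

```python
# Hypothetical illustration of the hourly-billing rule described above:
# an hourly fee may reflect only time actually spent, not time AI saved.

def hourly_fee(actual_hours: float, hourly_rate: float) -> float:
    """Return an hourly fee computed solely from time actually spent."""
    if actual_hours < 0 or hourly_rate < 0:
        raise ValueError("hours and rate must be non-negative")
    return actual_hours * hourly_rate


def is_permissible_hourly_bill(billed_hours: float, actual_hours: float) -> bool:
    """An hourly bill may not exceed the time actually spent on the task."""
    return billed_hours <= actual_hours


# Example from the text: AI cuts a research task from 5 hours to 1.
# The $300 rate is an assumed figure for illustration only.
rate = 300.0
pre_ai_estimate = 5.0  # hours the task used to take without AI
actual = 1.0           # hours actually spent with AI assistance

print(hourly_fee(actual, rate))                             # 300.0
print(is_permissible_hourly_bill(pre_ai_estimate, actual))  # False
print(is_permissible_hourly_bill(actual, actual))           # True
```

The sketch deliberately ignores alternative fee arrangements (flat or value-based fees), which the Bar suggests as the appropriate way to capture AI’s value instead of inflating hours.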

Finally, Virginia reminds lawyers to stay aware of any court rules about AI. Some courts, including courts outside Virginia, have begun requiring attorneys to certify that filings were checked for AI-generated falsehoods, or have even prohibited AI-drafted documents absent verification. Virginia’s guidance highlights that lawyers must comply with any such disclosure or anti-AI rules in whatever jurisdiction they practice nydailyrecord.com.

Overall, the Virginia State Bar’s message is: use common sense and existing rules. Be transparent when needed, protect confidentiality, supervise and double-check AI outputs, bill fairly, and follow any new court requirements nydailyrecord.com. This short-form guidance was praised for being “streamlined” and adaptable as AI tools continue to change nydailyrecord.com.

(Source: Virginia State Bar AI Guidance via N.Y. Daily Record nydailyrecord.com.)

District of Columbia Bar – Ethics Opinion 388 (September 2024)

The D.C. Bar issued Ethics Opinion 388, “Attorneys’ Use of Generative AI in Client Matters,” in the second half of 2024 kaiserlaw.com. The opinion closely analyzes the ethical implications of lawyers’ use of generative AI, drawing on the well-known Mata v. Avianca incident as a teaching example kaiserlaw.com. It then organizes its guidance under specific D.C. Rules of Professional Conduct.

Key points: The opinion breaks its analysis into categories of duties kaiserlaw.com:

- Competence (Rule 1.1): D.C. reiterates that technology competence is part of a lawyer’s duty. Attorneys must “keep abreast of … practice [changes], including the benefits and risks of relevant technology.” kaiserlaw.com. Before using AI, lawyers should understand how it works, what it does, and its potential dangers kaiserlaw.com. The opinion vividly quotes a description of AI as “an omniscient, eager-to-please intern who sometimes lies to you.” kaiserlaw.com. In practical terms, D.C. lawyers must know that AI output can be very convincing yet incorrect. The Mata v. Avianca saga, in which a lawyer unknowingly relied on a tool that “sometimes lies,” underscores the need for knowledge and caution dcbar.org.

- Confidentiality (Rule 1.6): D.C.’s Rule 1.6(f) specifically requires lawyers to exercise reasonable care to prevent unauthorized use of client information by third-party service providers kaiserlaw.com. This applies to AI providers. Lawyers are instructed to ask themselves: “Will information I provide [to the AI] be visible to the AI provider or others? Will my input affect future answers for other users (potentially revealing my data)?” kaiserlaw.com. When an AI tool sends data to an external server, the lawyer must ensure that the data is protected. D.C. would likely advise using privacy-protective settings, choosing tools that allow users to opt out of data sharing, or obtaining client consent where needed. Essentially, treat AI like any outside vendor under Rules 5.3 and 1.6: do due diligence to ensure confidentiality is preserved kaiserlaw.com.

- Supervision (Rules 5.1 & 5.3): A lawyer must supervise both other lawyers and nonlawyers in the firm regarding AI use kaiserlaw.com. This may entail firm policies, e.g., vetting which AI tools are approved and training staff to verify AI output for accuracy kaiserlaw.com. If a subordinate attorney or paralegal uses AI, the supervising attorney should make reasonable efforts to ensure they do so in compliance with all ethical duties and that any mistakes are corrected. The opinion views AI as an extension of one’s team, one that requires oversight.

- Candor to Tribunal & Fairness (Rules 3.3 and 3.4): Simply put, a lawyer cannot make false